CSAT vs NPS vs CES: Which customer metric drives growth in 2026?

Sneha Arunachalam

Jan 2026

CSAT vs NPS vs CES — if you’ve ever wondered which customer metric actually drives growth, you’re not alone.

Most teams track all three, but very few know which one truly matters, when, and why. The result? Plenty of data, very little direction.

Here’s the reality: companies that lead on customer experience grow revenue up to 2.5× faster than their competitors, yet many still rely on the wrong metric at the wrong moment.

In this guide, we break down CSAT vs NPS vs CES in plain terms: what each metric actually measures, how it’s calculated, where it fits in the customer journey, and which one has the strongest impact on retention, revenue, and repeat purchases.

By the end, you’ll know exactly which metric to prioritize, when to use it, and how to combine all three to turn customer feedback into real business growth.

What CSAT, NPS, and CES actually measure

Think of it like this: if customer metrics were a doctor's toolkit, each one would diagnose a different part of your business health. Understanding what each metric actually measures helps you pick the right tool for the job.

CSAT: Satisfaction with a specific interaction

Customer Satisfaction Score (CSAT) is your instant pulse check. It measures how happy customers are with one specific moment — a support call, a product delivery, a checkout process.

The typical CSAT survey keeps it simple: "How satisfied were you with [product/service/interaction]?"

Most CSAT surveys use a 1-5 scale where 5 means "very satisfied" and 1 means "very dissatisfied." Your score comes from taking only the good ratings (4-5), dividing by total responses, then multiplying by 100 for a percentage.

Here’s a quick example: if 80 customers give you a 4 or 5 out of 100 total responses, your CSAT is 80%. A common rule of thumb for reading the score:

- 90-100: Excellent

- 70-89: Good

- 50-69: Average

- Below 50: Poor
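If you want to apply these bands automatically in a report, here's a minimal Python sketch. It treats each band as an inclusive lower bound (so 89.5% still counts as Good), which is an assumption on our part; the function name `csat_band` is illustrative, not from any particular tool.

```python
def csat_band(score_percent: float) -> str:
    """Map a CSAT percentage (0-100) to the rule-of-thumb bands above."""
    if not 0 <= score_percent <= 100:
        raise ValueError("CSAT must be between 0 and 100")
    # Each band is treated as an inclusive lower bound.
    if score_percent >= 90:
        return "Excellent"
    if score_percent >= 70:
        return "Good"
    if score_percent >= 50:
        return "Average"
    return "Poor"


# The example above: 80 of 100 responses were a 4 or 5.
score = 100 * 80 / 100
print(score, csat_band(score))  # 80.0 Good
```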

CSAT shines when you need to know how one specific interaction went. It's your early warning system — catching problems before they snowball into bigger loyalty issues.

Why CSAT only works when it’s captured in context

This is where many teams struggle with CSAT.

When feedback is delayed, collected out of context, or stored separately from the actual customer interaction, CSAT turns into a passive number instead of a useful signal.

A low score tells you something went wrong, but not where, why, or what to fix next.

For CSAT to drive improvement, it needs to be:

- Triggered immediately after the interaction

- Tied to the exact conversation or ticket

- Visible alongside agent actions and resolution details

- Easy to analyze without manual reporting

That’s why teams comparing CSAT vs NPS vs CES often realize the real challenge isn’t choosing the right metric; it’s having the right system to capture and act on it at the moment it matters.

Turning CSAT into action, not just a score

This is where modern support platforms come into play.

Tools like SparrowDesk are designed to collect CSAT automatically at the right touchpoints, link responses directly to support conversations, and surface patterns across agents, channels, and issue types.

Instead of reviewing scores in isolation, teams can see CSAT as part of the full support experience.

The result is a feedback loop that actually works:

- Low CSAT highlights broken interactions early

- Trends reveal coaching and process gaps

- Improvements show up in repeat scores over time


When CSAT is embedded into everyday support workflows, it stops being a vanity metric and becomes a practical tool for improving customer experience.

NPS: Overall loyalty and brand perception

Net Promoter Score (NPS) steps way back to look at the whole relationship. Instead of asking about one interaction, NPS asks the big question: would you actually recommend us to someone you care about?

The standard NPS question is: "On a scale of 0 to 10, how likely are you to recommend [company/product/service] to a friend or colleague?"

Based on their answer, customers fall into three buckets:

- Promoters (9-10): Your biggest fans who keep buying and bring friends

- Passives (7-8): Satisfied enough but might jump ship for something better

- Detractors (0-6): Unhappy customers who could hurt your reputation

To get your NPS, subtract the percentage of detractors from the percentage of promoters. Scores run from -100 to +100 — higher is better.

Companies with stronger NPS scores typically see better retention, higher lifetime value, and lower marketing costs.

CES: Ease of completing a task or resolving an issue

Customer Effort Score (CES) asks one simple question: how hard did we make your life?

Whether customers are trying to resolve an issue, find an answer, or complete a purchase, CES measures the effort they had to put in. The basic idea? People want to do business with companies that make things easy.

A typical CES survey asks:

"On a scale of 1 to 7, how easy was it to interact with [company name]?" or shows a statement like "The company made it easy for me to resolve my issue" for customers to rate.

You calculate CES by averaging all responses; on an ease scale like this one, higher scores mean less customer effort.

Here's what makes CES so powerful: 94% of customers with low-effort interactions plan to buy again, compared to only 4% of those who struggled through high-effort experiences.

CES helps you spot exactly where customers get stuck in your processes. It's particularly useful for fixing digital workflows, simplifying support interactions, and removing friction from your customer journey.

How each metric is calculated and interpreted

OK, time for some math, but don't worry, we're keeping this simple. Getting these calculations right matters because bad data leads to bad decisions.

CSAT formula: percentage of top ratings

CSAT uses the most straightforward calculation you'll find. Here's how it works:

- Count the number of positive responses (ratings of 4 and 5)

- Divide this number by the total responses received

- Multiply by 100 to convert to a percentage

CSAT Formula: (Number of positive responses ÷ Total number of responses) × 100

Say 170 out of 200 customers gave you a 4 or 5 — your CSAT would be 85%. That means 85% of people were satisfied with whatever specific thing you're measuring.

Most businesses call anything above 75% "good," but these benchmarks shift depending on your industry and your own past scores.
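Here's the same calculation as a small Python sketch, assuming ratings arrive as integers on a 1-5 scale with 4 and 5 counted as positive. The `csat` function and the sample data are illustrative, not taken from any particular survey tool.

```python
def csat(ratings: list[int]) -> float:
    """CSAT: share of 4-5 ratings on a 1-5 scale, as a percentage."""
    if not ratings:
        raise ValueError("need at least one response")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on a 1-5 scale")
    positive = sum(1 for r in ratings if r >= 4)  # 4 = satisfied, 5 = very satisfied
    return 100 * positive / len(ratings)


# 170 of 200 customers answered 4 or 5; the remaining 30 answered 1-3.
ratings = [5] * 100 + [4] * 70 + [3] * 20 + [2] * 10
print(csat(ratings))  # 85.0
```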

NPS formula: promoters minus detractors

NPS gets a bit trickier because you're sorting people into buckets first:

- Identify promoters (scores 9-10), passives (scores 7-8), and detractors (scores 0-6)

- Calculate the percentage of promoters and detractors

- Subtract the percentage of detractors from the percentage of promoters

NPS Formula: Percentage of promoters - Percentage of detractors

Think of it like this: if 80% of people are promoters and 10% are detractors, your NPS is 70. Unlike CSAT, you get a single number between -100 and +100, not a percentage.

Anything above 0 means you have more promoters than detractors. Scores above 30? That's typically excellent. But again, this varies a lot by industry.
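A minimal Python sketch of the NPS steps, assuming raw 0-10 answers collected in a list. The sample data reproduces the 80% promoters / 10% detractors example; the remaining 10% are passives, and the function name `nps` is just illustrative.

```python
def nps(scores: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("scores must be on a 0-10 scale")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) are not counted in either group but stay in the denominator.
    return 100 * (promoters - detractors) / len(scores)


# 80 promoters, 10 passives, 10 detractors.
scores = [10] * 50 + [9] * 30 + [8] * 10 + [3] * 10
print(nps(scores))  # 70.0
```

Note the design consequence: passives don't add or subtract points, but because they sit in the denominator, a large passive group pulls the score toward 0.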

CES formula: average effort score

CES calculation depends on what scale you're using. The standard approach:

- Sum all individual customer effort ratings

- Divide by the total number of responses

CES Formula: Sum of all CES responses ÷ Total number of responses

Here's an example: 12 responses with scores of 3, 7, 5, 3, 7, 7, 6, 5, 7, 7, 7, and 7 gives you 71 ÷ 12 = 5.9.
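The averaging step in Python, as a sketch assuming a 1-7 ease scale. `ces_average` is a hypothetical helper name, and the range check is an added safeguard rather than part of the formula itself.

```python
def ces_average(ratings: list[int], low: int = 1, high: int = 7) -> float:
    """CES as the plain mean of effort ratings on a low..high ease scale."""
    if not ratings:
        raise ValueError("need at least one response")
    if any(r < low or r > high for r in ratings):
        raise ValueError(f"ratings must be between {low} and {high}")
    return sum(ratings) / len(ratings)


responses = [3, 7, 5, 3, 7, 7, 6, 5, 7, 7, 7, 7]  # the 12 ratings from the example
print(sum(responses), round(ces_average(responses), 1))  # 71 5.9
```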

Some teams use emoji scales instead. In that case, you subtract the percentage of negative responses from the percentage of positive ones:

Alternate CES Formula: Percentage of positive responses - Percentage of negative responses

With 250 positive and 50 negative responses out of 300 total:

- Positive percentage: (250 ÷ 300) × 100 = 83.33%

- Negative percentage: (50 ÷ 300) × 100 ≈ 16.67%

- CES = 83.33% - 16.67% ≈ 66.67% (working from the unrounded values: (250 - 50) ÷ 300 × 100)
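The emoji-scale variant can be sketched the same way in Python. This assumes you've already bucketed each emoji response into positive, negative, or neutral (how you map emojis is your call); `net_ces` is an illustrative name, not a standard function.

```python
def net_ces(positive: int, negative: int, total: int) -> float:
    """Alternate CES: % positive minus % negative; neutral answers only count in the total."""
    if total <= 0 or positive < 0 or negative < 0 or positive + negative > total:
        raise ValueError("counts must be non-negative and fit within the total")
    # (positive/total - negative/total) * 100, computed in one step to limit rounding.
    return 100 * (positive - negative) / total


print(round(net_ces(positive=250, negative=50, total=300), 2))  # 66.67
```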

Since everyone uses different scales, there's no universal "good" number for CES. Aim for the top 20% of whatever scale you pick. On a 1-5 scale, that means averaging roughly 4.2 or higher.

Each formula tells you something different about your customers. Put them together, and you get the full picture of what's working and what isn't.

When to use CSAT vs NPS vs CES in the customer journey

Think of it like this: asking the right question at the wrong time is like trying to have a deep conversation during a fire drill. Timing matters a lot.

Each metric works best when your customers are in the right headspace to give you meaningful feedback. Get it wrong, and you're just collecting noise.

CSAT: Right after specific moments

CSAT works because it catches people while the experience is still fresh in their minds. Here's when it actually makes sense:

Right after support calls: Send that survey while they're still on the phone or within minutes of hanging up. They're already thinking about the interaction, so why wait?

24-48 hours after delivery: Give people just enough time to open the box and try things out, but not so long that they forget how smooth (or bumpy) the process was.

During onboarding: Catch problems before they turn into bigger issues. Someone struggling with setup today might become a detractor tomorrow.

After sales interactions: Your sales process is often the first real impression people get. Find out what's working and what isn't.

The key with CSAT? Strike while the iron's hot. It's built for those "How was that?" moments.

Where this gets real: acting on the score while it’s still fresh

Timing your CSAT survey is only half the job.

The bigger challenge is what happens next.

Can you trace a low score back to the exact conversation, agent action, and resolution path without digging through spreadsheets or disconnected tools?

That’s why teams often look for a support platform that doesn’t just collect CSAT, but makes it usable in context.

SparrowDesk captures CSAT at the right moments (like right after a ticket or chat closes) and ties the response directly to the conversation.

So you can see what triggered the score, spot patterns across agents and issue types, and fix the right things faster.

If you’re comparing CSAT vs NPS vs CES, this is the practical difference a tool can make: turning feedback into a workflow signal, not just a report.


NPS: When they've had time to form an opinion

NPS is different — it's asking people to think about your entire relationship, not just one interaction. That takes time.

After they've actually used your product: Don't ask someone if they'd recommend you before they've figured out what you do. Give them a few weeks minimum.

Every quarter or so: Regular check-ins work, but don't overdo it. Nobody wants to get surveyed every month about the same relationship.

Before renewal time: This one's smart. Ask a few months early so you can actually fix problems before they walk away.

After hitting big milestones: When someone's been with you for a year, expanded their account, or reached a usage threshold. These moments tell you if you're building something lasting.

Space these out — surveying the same person every few weeks just annoys them.
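If you automate NPS sends, a simple eligibility check can enforce both the "few weeks minimum" and the spacing advice. This Python sketch is hypothetical: it reads "a few weeks" as 21 days and "every quarter or so" as at least 90 days between surveys, so adjust both thresholds to your own cadence.

```python
from datetime import date, timedelta
from typing import Optional

# Assumed thresholds, not an industry standard.
MIN_TENURE = timedelta(days=21)  # "a few weeks minimum" after signup
MIN_GAP = timedelta(days=90)     # "every quarter or so" between NPS surveys


def nps_eligible(signup: date, last_nps: Optional[date], today: date) -> bool:
    """Return True if this customer can be sent an NPS survey today."""
    if today - signup < MIN_TENURE:
        return False  # too new to judge the whole relationship
    if last_nps is not None and today - last_nps < MIN_GAP:
        return False  # surveyed too recently
    return True


today = date(2026, 1, 15)
print(nps_eligible(date(2026, 1, 5), None, today))               # False: 10 days in
print(nps_eligible(date(2025, 6, 1), date(2025, 12, 1), today))  # False: last survey 45 days ago
print(nps_eligible(date(2025, 6, 1), date(2025, 9, 1), today))   # True
```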

CES: After they've had to work for something

CES shines when people have just navigated your systems, solved a problem, or completed something that required effort.

Right after support tickets close: Did you make their life easier or harder? They'll know immediately.

After self-service interactions: Your website, app, or knowledge base either helped them or frustrated them. Find out which.

Following complex purchases: Multi-step checkouts, enterprise sales, onboarding processes. Anywhere they had to jump through hoops.

After any multi-step process: Returns, account changes, setup procedures. If it took more than a few clicks, measure the effort.

Remember what we said earlier: 94% of people with low-effort experiences plan to buy again. CES tells you exactly where you're making things harder than they need to be.

The bottom line? Each metric has its moment. Use them strategically, and you'll get insights that actually help you improve things.
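One way to put this timing advice into practice is a small lookup from customer events to surveys. Everything in this Python sketch is hypothetical: the event names, reading "within minutes" as 5 minutes, and picking 24 hours from the 24-48 hour delivery window. Real support and survey platforms name and schedule events in their own ways.

```python
from datetime import timedelta
from typing import Optional

# Hypothetical event names mapped to (metric, delay before sending).
SURVEY_RULES: dict[str, tuple[str, timedelta]] = {
    "support_call_ended": ("CSAT", timedelta(minutes=5)),
    "order_delivered": ("CSAT", timedelta(hours=24)),
    "ticket_closed": ("CES", timedelta(0)),
    "self_service_session_ended": ("CES", timedelta(0)),
    "return_completed": ("CES", timedelta(0)),
    "account_anniversary": ("NPS", timedelta(0)),
}


def plan_survey(event: str) -> Optional[tuple[str, timedelta]]:
    """Return (metric, delay) for an event, or None if no survey should be sent."""
    return SURVEY_RULES.get(event)


metric, delay = plan_survey("order_delivered")
print(metric, delay.total_seconds() / 3600)  # CSAT 24.0
print(plan_survey("page_viewed"))  # None
```

NPS sends triggered this way should still pass a frequency check so the same customer isn't surveyed every few weeks.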

CSAT vs NPS vs CES: Benefits and limitations of each metric

Here's the thing about customer metrics — they're all a bit like tools in a toolbox. Each one's brilliant for certain jobs, but try using a hammer when you need a screwdriver, and you'll make a mess.

CSAT: quick feedback but limited scope

CSAT is your instant gratification metric.

You get clear, easy-to-read numbers that tell you exactly how that last interaction went. It's the closest thing to a real-time temperature check you'll get from your customers, and honestly, that simplicity is gold.

One CSAT question can tell you more than a lengthy survey that nobody wants to fill out.

Customers actually like answering CSAT surveys because they're quick and straightforward.

Think of it as the "How was your meal?" question at a restaurant — simple, direct, and actionable.

But here's where CSAT gets tricky.

It's basically capturing a snapshot of someone's mood right after an interaction. If your customer just had a terrible morning, that might show up in their score even if your service was perfect.

Plus, what counts as "satisfied" varies widely depending on where people are from: what sounds "great" to someone in Australia might sound lukewarm to someone in the UK.

That’s why CSAT works best when it isn’t viewed in isolation.

A single score doesn’t tell the whole story unless it’s captured at the right moment and tied directly to the interaction behind it.

When CSAT responses are linked to the exact ticket, channel, and agent involved, teams can separate genuine service issues from momentary sentiment and spot patterns that actually matter.
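To make "linked to the exact ticket, channel, and agent" concrete, here's a minimal Python sketch with a hypothetical `CsatResponse` record and `csat_by` helper. Grouping the same responses two ways shows how context separates a process issue from an agent issue: in this made-up data both agents score 50%, but the channel split points at email.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CsatResponse:
    # Hypothetical record shape: each rating keeps the context it came from.
    ticket_id: str
    agent: str
    channel: str
    rating: int  # 1-5


def csat_by(responses: list[CsatResponse], key: str) -> dict[str, float]:
    """CSAT (% of 4-5 ratings) grouped by a field such as 'agent' or 'channel'."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for r in responses:
        group = getattr(r, key)
        totals[group] += 1
        if r.rating >= 4:
            positives[group] += 1
    return {g: 100 * positives[g] / totals[g] for g in totals}


responses = [
    CsatResponse("T-1", "ana", "chat", 5),
    CsatResponse("T-2", "ana", "email", 2),
    CsatResponse("T-3", "ben", "chat", 4),
    CsatResponse("T-4", "ben", "email", 1),
]
print(csat_by(responses, "agent"))    # {'ana': 50.0, 'ben': 50.0}
print(csat_by(responses, "channel"))  # {'chat': 100.0, 'email': 0.0}
```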

Modern support teams use platforms like SparrowDesk to collect CSAT immediately after support conversations, automatically connect feedback to the underlying context, and surface trends across agents, channels, and issue types.

Instead of reacting to individual scores, teams can focus on fixing repeat problems, improving consistency, and coaching with confidence.

When CSAT is grounded in context, it stops being a mood indicator and starts becoming a reliable signal for improving customer experience.


NPS: strategic insight but lacks context

NPS is the metric that gets executives excited because it actually connects to business growth.

When nearly three-quarters of CX leaders use it, you know it's doing something right. It's perfect for those boardroom conversations about where you stand against competitors.

The beauty of NPS? It's dead simple.

Two questions max, and even your sales team can understand what it means when customers say they'd recommend you. NPS creates this company-wide rallying cry around making customers happy enough to tell their friends.

But — and this is a big but — collecting NPS scores won't magically fix your problems.

You've got to dig into the why, figure out what to change, and actually do something about it. Too many companies treat NPS like a vanity metric instead of a diagnostic tool.

When your NPS drops, it doesn't tell you whether the issue is your product, your support, or your pricing. Some researchers even question whether NPS is really the growth predictor it's cracked up to be.

CES: great for reducing friction but narrow focus

CES is your early warning system for customer churn. It spots the friction that drives people away before they even complain. When scores show high effort, you know exactly where your process is breaking down.

What makes CES special is how well it predicts what customers will do next. Remember that 94% versus 4% repurchase stat? That's CES showing its true power. It's like having a crystal ball for customer retention.

The downside? CES only looks at one piece of the puzzle. A customer might breeze through your checkout process but still hate your product. And here's the kicker: what feels "effortless" to a tech-savvy 25-year-old might feel impossible to someone who's less comfortable with digital tools.

Each metric has its moment to shine, but none of them tells the whole story on its own.

Quick comparison: CSAT vs NPS vs CES - benefits & limitations of each metric

| Metric | What it does well | Where it falls short |
|---|---|---|
| CSAT | Delivers quick, real-time feedback; simple and easy for customers to answer; great for evaluating specific interactions | Heavily influenced by mood and timing; limited to individual touchpoints; “Satisfied” means different things across cultures |
| NPS | Strong signal of loyalty and growth potential; easy to understand across teams and leadership; useful for benchmarking against competitors | Lacks context on why scores change; doesn’t point to specific fixes; often misused as a vanity metric |
| CES | Excellent at identifying friction points; strong predictor of churn and repeat purchase; directly tied to ease of doing business | Focuses only on effort, not overall sentiment; misses product or brand perception; “Ease” varies by user capability and expectations |

Can you use CSAT, NPS, and CES together?

Here's the thing everyone gets wrong about NPS vs CSAT vs CES — they're not fighting each other for your attention. Think of them more like a three-person team where each player has a specific role. Together, they give you a complete picture that no single metric could ever show you.

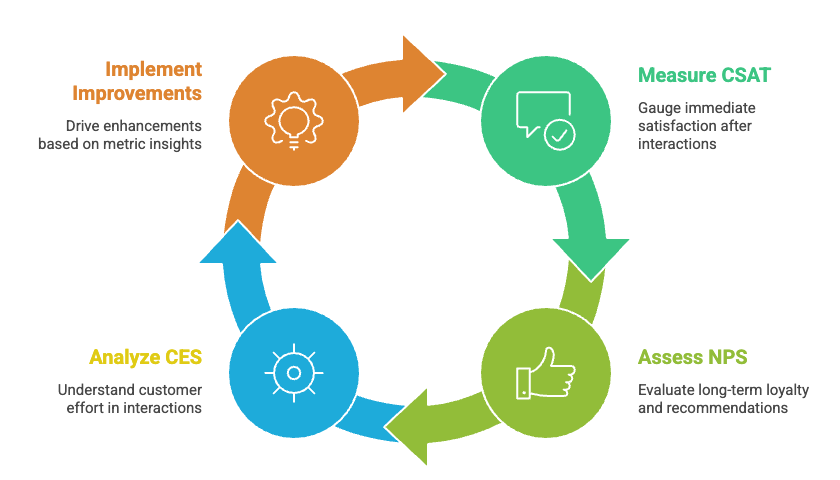

Layering metrics for a full CX picture

These metrics naturally stack on top of each other, like building blocks:

- Agent-level: CSAT shows how happy people are with specific moments

- Process-level: CES reveals where you're making things too hard

- Company-level: NPS tells you about the overall relationship

This layered approach gives you that full 360-degree view of what's really happening. Let's say you run an e-commerce site. Your customers might love your product selection (high CSAT), but your checkout process is driving them crazy (low CES). Eventually, that friction shows up in lower NPS scores because they won't recommend you to friends.

How CSAT and CES support NPS improvements

When your NPS suddenly drops, CSAT and CES become your detective tools. Research shows that NPS changes often trace back to specific touchpoint problems you can spot in CSAT data.

Here's how this plays out: Your quarterly NPS tanks, and you're scrambling to figure out why. A quick look at recent CSAT scores might show that your chat support is falling apart. Meanwhile, CES data could reveal that your new return process is way too complicated.

Now you've got a clear action plan:

- NPS spotted the overall problem

- CSAT pinpointed the broken touchpoint

- CES highlighted exactly where the friction lives
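A rough Python sketch of the "CSAT pinpointed the broken touchpoint" step: compare per-touchpoint CSAT across two periods and rank the largest declines. The touchpoint names and scores are made up for illustration, and `biggest_csat_drops` is a hypothetical helper.

```python
def biggest_csat_drops(
    previous: dict[str, float], current: dict[str, float], top: int = 3
) -> list[tuple[str, float]]:
    """Rank touchpoints by how far CSAT fell between two periods (largest drop first)."""
    changes = [(tp, current[tp] - previous[tp]) for tp in current if tp in previous]
    changes.sort(key=lambda item: item[1])  # most negative change first
    return [item for item in changes[:top] if item[1] < 0]  # keep only real declines


previous = {"chat": 88.0, "email": 82.0, "phone": 79.0, "delivery": 90.0}
current = {"chat": 64.0, "email": 81.0, "phone": 80.0, "delivery": 91.0}
print(biggest_csat_drops(previous, current))  # [('chat', -24.0), ('email', -1.0)]
```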

Avoiding data overload with clear goals

But here's where things get messy — using multiple metrics can quickly turn into a data nightmare.

To keep things manageable, stick to these basics:

- Give each metric a specific job

- Make sure everyone on your team understands what each one does

- Weight them based on what actually matters to your business

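Some companies create customer health scores using formulas like: Health Score = (0.5 * CES) + (0.3 * CSAT) + (0.2 * NPS). That might work for them, but you'll need to figure out what weights make sense for your situation. And because the three metrics use different scales, each score should be converted to a common range (such as 0-100) before you weight it.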
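One catch with a formula like this: CES, CSAT, and NPS sit on different scales, so plugging in raw numbers lets CSAT's 0-100 percentages swamp a 1-7 CES average. The Python sketch below rescales each metric to 0-100 before applying the example weights. The rescaling ranges (a 1-7 ease scale for CES, -100 to +100 for NPS) are our assumptions, not part of the quoted formula.

```python
def to_0_100(value: float, low: float, high: float) -> float:
    """Linearly rescale a score from [low, high] onto 0-100."""
    if not low <= value <= high:
        raise ValueError(f"{value} is outside [{low}, {high}]")
    return (value - low) / (high - low) * 100


def health_score(ces: float, csat: float, nps: float) -> float:
    """Weighted health score using the example weights, after rescaling each metric."""
    ces_n = to_0_100(ces, 1, 7)       # assumes a 1-7 ease scale (higher = easier)
    csat_n = to_0_100(csat, 0, 100)   # CSAT is already a percentage
    nps_n = to_0_100(nps, -100, 100)  # NPS runs from -100 to +100
    return 0.5 * ces_n + 0.3 * csat_n + 0.2 * nps_n


print(round(health_score(ces=5.5, csat=85, nps=30), 1))  # 76.0
```

With the same inputs but no rescaling, the score would be 0.5 × 5.5 + 0.3 × 85 + 0.2 × 30 = 34.25, with CES contributing only 2.75 of it despite carrying the largest weight.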

Bottom line — simplicity wins. Without clear goals, all that data just becomes noise, and you'll struggle to actually do anything useful with it.

Which metric actually drives growth?

Think of it like this: if you're running a business, you want to know which numbers actually move revenue. Not all customer metrics pack the same punch when it comes to your bottom line.

NPS as a predictor of revenue and loyalty

The growth evidence for NPS is hard to ignore. Companies with the highest Net Promoter Scores in their industries grow revenue 2.5 times faster than competitors, and NPS explains about 20% to 60% of the difference in how fast companies grow compared to their competition.

Here's what the research shows:

- Bump your NPS up by 10+ points, and you'll see upsell revenue jump 3.2%

- The London School of Economics found that a 7% NPS increase equals 1% more overall revenue

- Industry NPS leaders consistently grow twice as fast as everyone else

Companies that actually use NPS right — running surveys regularly and fixing what customers complain about — see customer retention go up by 8.5%.

CES as a churn-reduction tool

CES might be the best crystal ball you've got for predicting customer behavior. Remember that stat we mentioned earlier? 94% of customers who find you easy to work with plan to buy again, compared to only 4% who struggle through high-effort interactions.

The churn prevention power is real:

- High-effort experiences predict customers will leave, even if they don't complain right away

- Cut the effort, and repurchase intent can jump up to 94%

- Make things hard for customers, and 96% of those with high-effort experiences become more disloyal

CES focuses on convenience over happiness, but here's the thing — effort often predicts repeat business better than other metrics.

CSAT as a performance improvement signal

CSAT might not get as much attention in growth conversations, but it delivers real money. Harvard Business Review found that customers with great past experiences spend 140% more than those who had terrible ones.

CSAT works because it helps you fix problems fast:

- It connects customer feedback directly to things you can actually improve

- Better CSAT scores can help you keep 74% of customers for another year

- As an early warning system, CSAT catches issues before they tank your other metrics

Bottom line? NPS predicts long-term growth, CES stops customers from leaving, and CSAT gives you immediate fixes. The smartest companies don't pick just one — they use all three to get the full picture.

This is where having the right system matters as much as choosing the right metric.

CSAT only drives performance when it’s tightly connected to day-to-day support work. If scores live in spreadsheets or disconnected dashboards, teams spot issues too late or miss the context needed to fix them.

SparrowDesk is built to close that gap. CSAT is captured automatically at key moments, linked directly to the exact ticket, agent actions, and resolution path behind each score.

Teams don’t just see what the score is; they understand why it moved.


That makes CSAT practical instead of reactive:

- Managers can identify coaching gaps before they impact NPS

- Teams can fix broken workflows while the experience is still fresh

- Improvements show up quickly across repeat interactions

When CSAT is embedded into everyday support workflows, it becomes what it’s meant to be: a real-time performance signal that helps teams improve continuously, without waiting for quarterly loyalty metrics to catch up.

Here's how they stack up: CSAT vs NPS vs CES

Think of this like a cheat sheet — everything you need to know about CSAT, NPS, and CES in one place. No fluff, just the facts that actually matter for your business.

| Aspect | CSAT | NPS | CES |
|---|---|---|---|
| Measures | Satisfaction with specific interactions | Overall loyalty and likelihood to recommend | Effort required to complete tasks/resolve issues |
| Calculation | (Positive responses ÷ Total responses) × 100 | % Promoters - % Detractors | Average of all effort ratings |
| Score range | 0-100% (90-100%: Excellent, 70-89%: Good) | -100 to +100 (>30 considered excellent) | Typically a 1-7 scale (higher is better) |
| Best timing | After support calls | Post-onboarding | After support resolutions |
| Key benefits | Quick feedback | Predicts business growth | Strong predictor of repurchase |
| Limitations | Measures only immediate sentiment | Lacks context for improvements | Narrow focus on effort only |
| Growth impact | 140% more spending from customers with the best experiences | 2.5x faster revenue growth for companies with the highest scores | 94% repurchase intent with low-effort experiences |

The bottom line? Each metric tells you something different, but they're all pointing toward the same goal — keeping customers happy enough to stick around and spend more money.

Conclusion

Here's what we know after looking at all the data: no single metric is going to tell you everything about your customers. CSAT gives you those quick snapshots of specific moments. NPS shows you the bigger loyalty picture. CES reveals exactly where people are getting stuck.

The numbers don't lie about their impact.

Companies crushing it with NPS grow revenue 2.5 times faster than their competitors. Get your CES right, and 94% of customers with low-effort experiences say they plan to buy again.

And CSAT?

Customers with the best past experiences spend 140% more than those who had the worst ones.

But here's the thing — you don't have to pick sides in this CSAT vs NPS vs CES debate.

Smart companies use all three strategically. They drop CSAT surveys right after key interactions, measure CES when processes get complex, and check NPS at those big relationship moments. This approach gives you the full picture without drowning in data.

Your specific goals should drive which one gets priority.

- Trying to stop customers from leaving? CES is your best friend.

- Want to predict where your revenue's heading? NPS has your back.

- Need immediate fixes for what's broken right now? CSAT delivers those answers fast.

The real magic happens when you stop treating these as competing metrics and start seeing them as different lenses on the same customer relationship.

Each one reveals something the others miss. Together, they create a measurement system that actually helps you make your customer experience better, not just track it.

This is exactly where the right support platform makes the difference.

SparrowDesk is built for teams that don’t just want to measure CSAT, NPS, and CES but actually use them to improve customer experience in real time.

CSAT is captured immediately after conversations, tied directly to tickets and agents.

CES highlights friction right where customers struggle. And NPS gives you a clear pulse on long-term loyalty, all without juggling multiple tools or spreadsheets.

Instead of treating feedback as disconnected scores, SparrowDesk brings these signals into everyday support workflows, so teams can spot issues early, coach effectively, and improve experiences before customers churn.

When customer metrics are collected at the right moment and connected to real actions, they stop being numbers on a dashboard and start driving meaningful growth.


Key takeaways

Understanding the distinct roles of CSAT, NPS, and CES helps you choose the right metric for specific business goals and customer journey touchpoints.

• Use metrics strategically by timing: Deploy CSAT after specific interactions, NPS at relationship milestones, and CES after complex processes for maximum insight value.

• NPS drives long-term growth: Companies with the highest NPS scores grow revenue 2.5 times faster than competitors, making it the strongest predictor of business expansion.

• CES prevents customer churn: 94% of customers with low-effort experiences intend to repurchase, versus only 4% of those with high-effort interactions.

• Layer all three metrics together: Rather than choosing one, successful companies use CSAT for immediate feedback, CES for friction identification, and NPS for loyalty prediction.

• Focus on actionable improvements: Collecting data means nothing without analyzing drivers, designing changes, and implementing solutions based on metric insights.

The most effective customer experience strategy doesn't rely on a single metric but creates a comprehensive measurement system that captures satisfaction, effort, and loyalty across the entire customer journey.
